Social media companies and the upcoming Congressional hearing

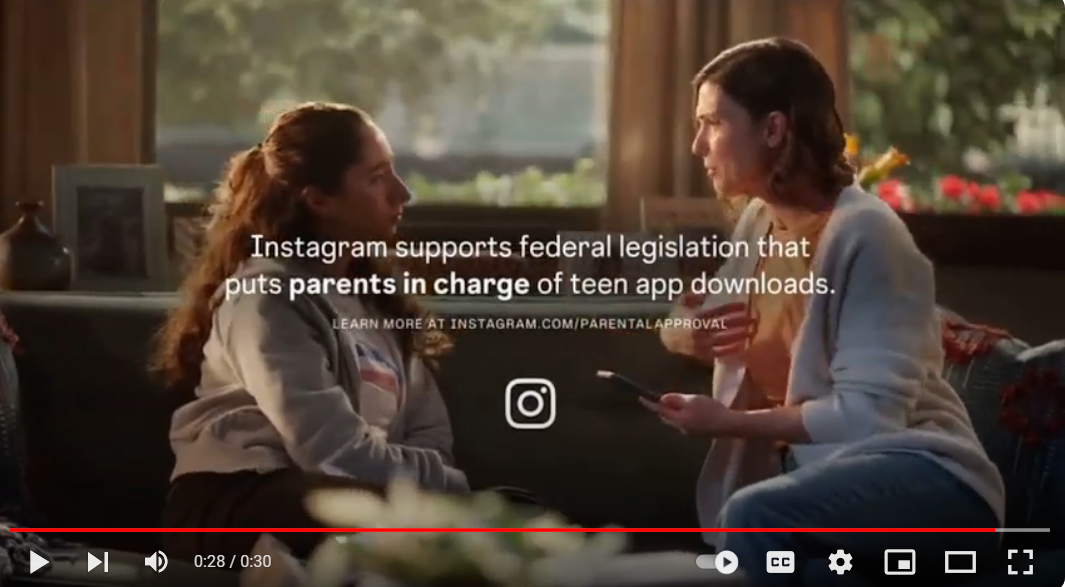

Have you seen the new Instagram ad about Instagram wanting to work with Congress so parents approve which apps their kids download?

Seems like a good idea, right?

In fact, why haven’t parents always been able to control which apps their kids have access to? Seems logical.

Maybe you've seen this ad and wondered - What's behind this newfound desire to help parents supervise their kids on social media?

It's another attempt to muddy the waters. Another bait and switch effort by Big Tech.

Letting parents approve apps will do nothing for the horribly inappropriate content that is all over mainstream apps like TikTok and Instagram.

Don’t get me wrong. We should allow parents to select the apps kids can access. But that’s a whole different issue than what Big Tech doesn’t want you to focus on - the algorithms, features and content that are hurting children and teens right now.

The timeline is suspect

Meta announced this commitment to parents choosing the apps kids can have on November 15th, 2023.

Just a week before that, Arturo Bejar, Meta whistleblower, appeared before Congress (November 7th, 2023). His testimony is important because when he worked at Meta, he reported to senior executives at both Instagram and Facebook and he worked on safety initiatives at both companies. He knows stuff!

Meta’s “commitment” is in direct response to Bejar highlighting the safety gaps that have gotten worse over time at Facebook and Instagram. It’s a bandaid, a distraction.

Want to know another reason Meta is posturing?

The spotlight will be shining on all the Big Tech companies at the end of January.

We're gearing up for a major event in Washington, DC on January 31st. There's a Senate Judiciary Committee hearing on online child sexual exploitation and tech execs will be there. (Some of them volunteered and some were subpoenaed.) It’s been a while since we’ve seen Big Tech CEOs in a hearing and all eyes will be on them as they try to explain why kids still are not protected on their social media products.

The Senate Judiciary Committee will hear from the following five CEOs of Big Tech companies:

Mark Zuckerberg, CEO of Meta

Linda Yaccarino, CEO of X (formerly Twitter)

Shou Zi Chew, CEO of TikTok

Evan Spiegel, CEO of Snap

Jason Citron, CEO of Discord

Dozens of grieving parents are expected to be sitting right behind these CEOs during the hearing. Four hundred parents have also signed a letter urging the U.S. Senate to pass legislation to regulate Big Tech and protect their rights to hold Big Tech accountable.

Other suspect announcements

On January 11, 2024, Meta congratulated itself by announcing “We’ve developed more than 30 tools and resources to support teens and their parents, and we’ve spent over a decade developing policies and technology to address content that breaks our rules or could be seen as sensitive.”

Why didn’t Meta list those 30 tools so we could evaluate them for ourselves?

Meta says it will:

start to hide more types of content for teens on Instagram and Facebook, in line with expert guidance.

automatically place all teens into the most restrictive content control settings on Instagram and Facebook and restrict additional terms in Search on Instagram.

prompt teens to update their privacy settings on Instagram in a single tap with new notifications.

Again, why did it take this long for these basic safety features?

By the way, Meta isn’t alone.

Other platforms are scurrying around, announcing enhanced safety features and “good ideas” in advance of the hearing.

On January 11, 2024, Snapchat expanded its Family Center (the area that holds its parental controls) so parents can now opt their kids out of using Snapchat’s creepy AI chatbot. (Why wasn’t this always in place???)

Huge news - On January 25, Snap (Snapchat) did a 180 and separated itself from the Big Tech alliance by declaring that it now supports the Kids Online Safety Act (KOSA), which has been stuck in Congress for two years.

This is the first time a major social media platform used by kids has backed KOSA. KOSA has bipartisan support in Congress. It requires social media companies to provide minors with options that aim to protect their information, disable addictive product features and opt them out of algorithmic recommendations. KOSA also creates a “duty of care” for social media companies to put their young users’ well-being before other goals, to prevent and mitigate harms to minors.

Unfortunately, all of these announcements are a ruse so platforms can pretend they’re pro-protecting kids who are using their products. Their goal is actually to be able to say they’re the good guys, so Congress won’t intervene and force regulations on them.

The bottom line

We’ve now seen this cycle repeat: Congressional testimony from whistleblowers and parents who expose harmful content on social media, followed by bandaids and fake fixes from Big Tech companies. We need to demand that Congress set safety standards to protect our kids and teens.

Big Tech isn’t capable of doing it themselves.

We need standards and safeguards for social media companies that allow kids to use their products.

Contact your legislators here (in one easy step) to show your support of KOSA.